Publications

A list of my publications from recent years. You can also check my Google Scholar page here.

My Google Scholar Page.

2025

- Transferable Variational Feedback Network for Vendor and Sequence Generalization in Accelerated MRI. Riti Paul, Sahil Vora, Pak Lun Kevin Ding, and 4 more authors. Big Data Mining and Analytics, 2025.

Magnetic Resonance Imaging (MRI) serves as a crucial diagnostic tool in medical practice, yet its lengthy acquisition times pose challenges for patient comfort and clinical efficiency. Current deep learning approaches for accelerating MRI acquisition face difficulties in managing data variability caused by different scanner vendors or imaging protocols. This research investigates the use of transfer learning in variational deep learning models to enhance generalization capabilities. We collect 135 ACR phantom samples from 3.0T GE and SIEMENS MRI scanners, following standard ACR guidelines, to study vendor-specific generalization. Additionally, the fastMRI brain dataset, a recognized benchmark for MRI acceleration, is utilized to evaluate performance across diverse acquisition sequences. Through comprehensive testing, we identify vendor and sequence inconsistencies as key hurdles for accelerated MRI generalization. To overcome these challenges, we introduce a feature refinement-based transfer learning method, achieving significant gains over baseline models in both vendor and sequence generalization tasks. Moreover, we incorporate experience replay to mitigate catastrophic forgetting, resulting in notable performance stability. For vendor generalization, our approach reduces Peak Signal-to-Noise Ratio (PSNR) and Structural SIMilarity (SSIM) degradation by 25.55% and 9.5%, respectively. Similarly, for sequence transfer, forgetting is reduced by 3.5% (PSNR) and 2% (SSIM), establishing a robust framework with substantial improvements.

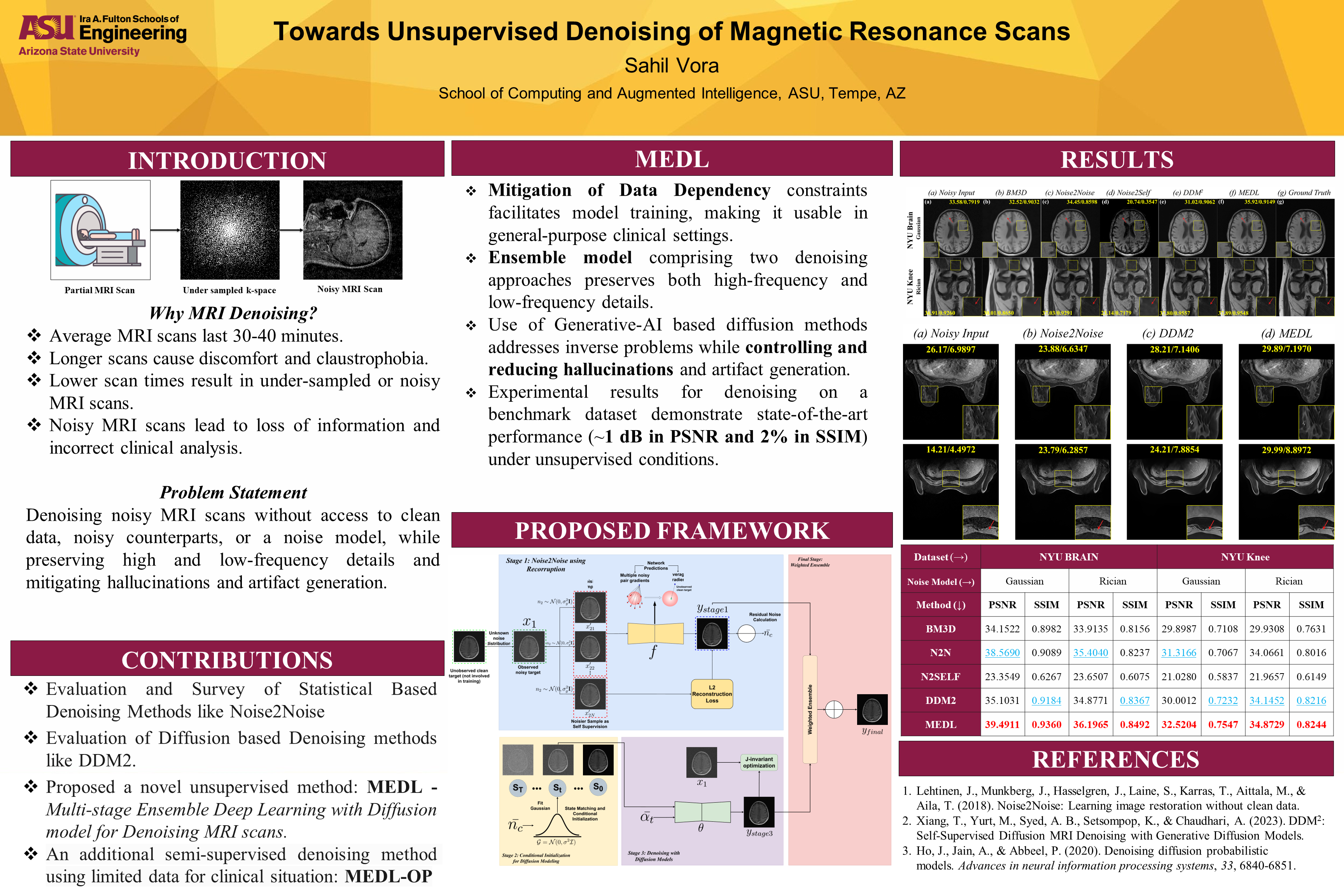

- MEDL: Unsupervised Multi-Stage Ensemble Deep Learning With Diffusion Models for Denoising MRI Scans. Sahil Vora, Riti Paul, Pak Lun Kevin Ding, and 5 more authors. In 2025 IEEE 22nd International Symposium on Biomedical Imaging (ISBI), 2025.

Image denoising is a persistent challenge, often relying on paired datasets or known noise distributions. While deep neural networks are effective, they are impractical for medical applications like Magnetic Resonance Imaging (MRI) because they depend on paired datasets and struggle with unknown noise distributions. Recent advances in self-supervised techniques and diffusion models allow denoising from a single noisy image, but current methods struggle with over-smoothing and hallucination, limiting clinical applicability. To address these issues, we propose a Multi-stage Ensemble Deep Learning framework that capitalizes on the power of diffusion models and operates independently of signal or noise priors. By rescaling multi-stage reconstructions, it balances smoothing and hallucination. Evaluations show a 1 dB and 2.5% improvement in PSNR and SSIM, respectively, over the best existing methods, with promising results for real clinical MRI scans.

- A-FSL: Adaptive Few-Shot Learning via Task-Driven Context Aggregation and Attentive Feature Refinement. Riti Paul, Sahil Vora, Nupur Thakur, and 1 more author. In Pattern Recognition, 2025.

Learning new categories with limited training samples presents a significant challenge for conventional deep learning frameworks. The few-shot learning (FSL) paradigm emerges as a potential solution to address practical constraints in this challenge. The primary difficulties in FSL are insufficient prior knowledge and ineffective alignment of clusters to their corresponding classification vectors in the pretrained feature space. While many FSL methods employ task-agnostic instances and class-specific embedding functions, we argue that incorporating task-specific knowledge is crucial for overcoming FSL challenges. To achieve adaptability in FSL, we propose an Adaptive Few-Shot Learning (A-FSL) framework which (1) aggregates task-specific knowledge and adapts the classification vectors in the pretrained feature space and (2) develops a query class correlation attention module to enhance cluster formation. By considering task-specific information at multiple scales of visual features, we can overcome the limitations of a fixed feature space and refine it to adapt classification and query vectors effectively. The A-FSL framework leads to well-formed clusters for novel classes where classification vectors are drawn toward the clusters, even in the 1-shot setting. Through comprehensive experimental evaluation, we show that our method outperforms the current state-of-the-art on benchmark datasets.

2024

- Towards Unsupervised Denoising of Magnetic Resonance Imaging. Sahil Vora. 2024. Master's Thesis for completion of Master of Science in Computer Science.

Image denoising, a fundamental task in computer vision, poses significant challenges due to its inherently inverse and ill-posed nature. Despite advancements in traditional methods and supervised learning approaches, particularly in medical imaging such as Magnetic Resonance Imaging (MRI) scans, the reliance on paired datasets and known noise distributions remains a practical hurdle. Recent progress in noise statistical independence theory and diffusion models has revitalized research interest, offering promising avenues for unsupervised denoising. However, existing methods often yield overly smoothed results or introduce hallucinated structures, limiting their clinical applicability. This thesis tackles the core challenge of progressing towards unsupervised denoising of MRI scans. It aims to retain intricate details without smoothing or introducing artificial structures, thus ensuring the production of high-quality MRI images. The thesis makes a three-fold contribution: Firstly, it presents a detailed analysis of traditional techniques, early machine learning algorithms for denoising, and new statistical-based models, with an extensive evaluation study on self-supervised denoising methods highlighting their limitations. Secondly, it conducts an evaluation study on an emerging class of diffusion-based denoising methods, accompanied by additional empirical findings and discussions on their effectiveness and limitations, proposing solutions to enhance their utility. Lastly, it introduces a novel approach, Unsupervised Multi-stage Ensemble Deep Learning with diffusion models for denoising MRI scans (MEDL). Leveraging diffusion models, this approach operates independently of signal or noise priors and incorporates weighted rescaling of multi-stage reconstructions to balance over-smoothing and hallucination tendencies. Evaluation using benchmark datasets demonstrates an average gain of 1 dB and 2% in PSNR and SSIM metrics, respectively, over existing approaches.

- Transferable Variational Feedback Network for Vendor Generalization in Accelerated MRI. Riti Paul, Sahil Vora, Kevin Pak Lun Ding, and 4 more authors. In International Conference on AI in Healthcare, 2024.

Magnetic Resonance Imaging (MRI) is a widely used diagnostic tool in medicine. The long acquisition time of MRI remains a practical concern, leading to suboptimal patient experiences. Existing deep learning models for fast MRI acquisition struggle to handle the problem of data heterogeneity due to scanners from different vendors. This study explores the transfer learning capabilities of variational deep learning architectures to address this problem. Using standard ACR protocols, we acquired 135 ACR phantom samples from GE and Siemens 3.0T MR scanners and conducted comprehensive experiments to compare the reconstruction quality of the images produced by different models. Our experiments identified vendor differences as a major challenge in the generalization of accelerated MRI. We propose a feature refinement-based transfer learning method that outperforms the baseline networks by 2.0 dB (PSNR) and 1.8% (SSIM) for GE, and by 3.0 dB (PSNR) and 0.8% (SSIM) for Siemens. Furthermore, we used experience replay to address the problem of catastrophic forgetting. We established it as a robust baseline through experiments with strong results (PSNR and SSIM performance drop reduced by 25.55% and 9.5%, respectively).

@inproceedings{paul2024transferable, title = {Transferable Variational Feedback Network for Vendor Generalization in Accelerated MRI}, author = {Paul, Riti and Vora, Sahil and Ding, Kevin Pak Lun and Patel, Ameet and Hu, Leland and Li, Baoxin and Zhou, Yuxiang}, booktitle = {International Conference on AI in Healthcare}, pages = {48--63}, year = {2024}, organization = {Springer}, }

2023

- Instance Adaptive Prototypical Contrastive Embedding for Generalized Zero Shot Learning. Riti Paul, Sahil Vora, and Baoxin Li. 2023. Accepted in IJCAI 2023 Workshop on Generalizing from Limited Resources in the Open World.

Generalized zero-shot learning (GZSL) aims to classify samples from seen and unseen labels, assuming unseen labels are not accessible during training. Recent advancements in GZSL have been expedited by incorporating contrastive-learning-based (instance-based) embedding in generative networks and leveraging the semantic relationship between data points. However, existing embedding architectures suffer from two limitations: (1) limited discriminability of synthetic features’ embedding without considering fine-grained cluster structures; (2) inflexible optimization due to restricted scaling mechanisms on existing contrastive embedding networks, leading to overlapped representations in the embedding space. To enhance the quality of representations in the embedding space, as mentioned in (1), we propose a margin-based prototypical contrastive learning embedding network that reaps the benefits of prototype-data (cluster quality enhancement) and implicit data-data (fine-grained representations) interaction while providing substantial cluster supervision to the embedding network and the generator. To tackle (2), we propose an instance adaptive contrastive loss that leads to generalized representations for unseen labels with increased inter-class margin. Through comprehensive experimental evaluation, we show that our method can outperform the current state-of-the-art on three benchmark datasets. Our approach also consistently achieves the best unseen performance in the GZSL setting.

@misc{paul2023instance, title = {Instance Adaptive Prototypical Contrastive Embedding for Generalized Zero Shot Learning}, author = {Paul, Riti and Vora, Sahil and Li, Baoxin}, year = {2023}, eprint = {2309.06987}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, note = {Accepted in IJCAI 2023 Workshop on Generalizing from Limited Resources in the Open World}, }

2021

-

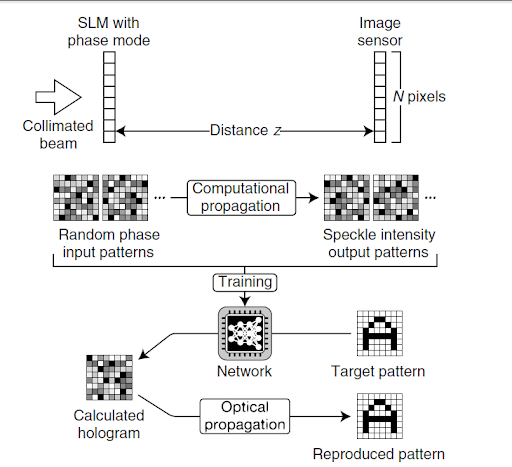

Phase Recovery for Holography using Deep LearningS. VoraInternational Research Journal of Engineering and Technology, Mar 2021

Computer-generated holography (CGH) is the strategy for carefully creating holographic interference designs. A hologram is a true account of an interference design that utilizes diffraction to repeat a 3D light field, bringing about a picture that has the depth, parallax, and different properties of the original scene. A holographic picture can be produced for example by carefully registering a holographic interference example and printing it onto a cover or film for ensuing brightening by a reasonable intelligible light source. However, CGH is an iterative technique to register this interference which is time and asset demanding. This paper proposes a technique utilizing deep learning networks that uses a non-iterative calculation which is proficient when contrasted with CGH and galvanizes the plan to utilize this technique to consolidate computer vision and the field of optics.

@article{irjet, title = {Phase Recovery for Holography using Deep Learning}, author = {Vora, S.}, journal = {International Research Journal of Engineering and Technology}, volume = {08}, issue = {03}, pages = {577-580}, numpages = {4}, year = {2021}, month = mar, publisher = {Fast Track Publications}, dimensions = {true}, }